Hi, I am referring code from Version 1.3 of the scheduler which can be directly accessed from this github link.

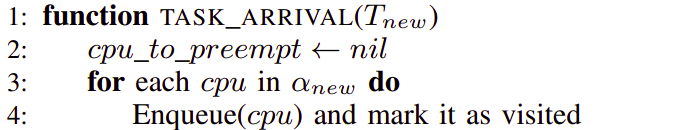

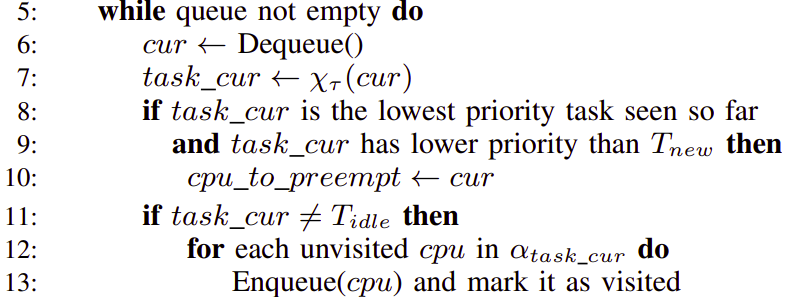

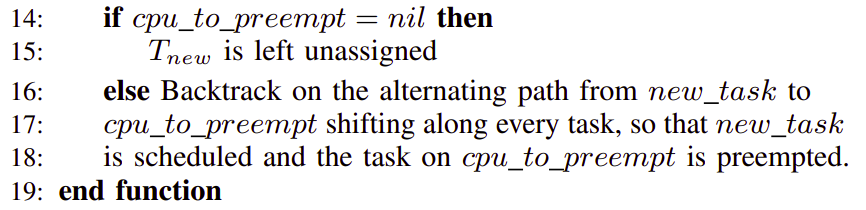

During this post, Paper refers to this paper, and Algorithm 1 (Page 5 of paper) refers to the algorithm for task arrival.

The post covers the following three topics for this function:

- What this function does

- Reason behind selecting this function for implementing Algorithm 1

- Explanation of the code and design choices

Section 1: What this function does

Enqueue function of a scheduler is called to enqueue a node either in scheduled or in ready queue. So, this would correspond to algorithm 1 from our paper which is the algorithm for a task arrival.

Section 2: Reason for selecting this function

Following are some of the important places that _Scheduler_strong_APA_Enqueue is called (and to some extent justifies our selection of the function as the place of implementation of Algorithm 1) :

- In Scheduler_SMP_Unblock where a node is unblocked. So the node changes its state from BLOCKED to either READY or SCHEDULED based on whether the scheduler allocates a processor for it or not. The node asks for help (learn more here) in case it doesn’t get scheduled and is put in the ready queue.

- In Scheduler_SMP_Update. This function is used to update the priority of a node, in case the node was in ready state, there is a chance that the new priority might make it eligible to be scheduled on a processor. Hence Enqueue function is called.

Section 3: Explaining the code

The function is divided in the following three parts:

- Initializing the data structures needed for carrying out the Algorithm 1

- Finding the lowest scheduled node that would be preempted (if has lower priority than the node looking to get enqueued)

- Backtracking to preempt the lowest scheduled node

Initializing the data structures

We traverse through all the cpu present in the system and put the ones which the node has an affinity to in the queue by inreasing the value of rear. Since the size of the array Struct is (proof in permalink) as big as the CONFIGURE_MAXIMUM_PROCESSORS, the queue would never overflow.

//Denotes front and rear of the queue

uint32_t front;

uint32_t rear;

front = 0;

rear = -1;

self = _Scheduler_strong_APA_Get_self( context );

Struct = self->Struct;

strong_node = _Scheduler_strong_APA_Node_downcast( node );

cpu_max = _SMP_Get_processor_maximum();

for ( cpu_index = 0 ; cpu_index < cpu_max ; ++cpu_index ) {

Struct[ cpu_index ].visited = false;

//Checks if the thread_CPU is in the affinity set of the node

if ( _Processor_mask_Is_set( &strong_node->Affinity, cpu_index) ) {

Per_CPU_Control *cpu = _Per_CPU_Get_by_index( cpu_index );

if ( _Per_CPU_Is_processor_online( cpu ) ) {

rear = rear + 1;

Struct[ rear ].cpu = cpu;

Struct[ cpu_index ].visited = true;

Struct[ cpu_index ].caller = node;

}

}

}

Corresponding link to code

We achieve queue like effect in the array Struct by increasing the value of rear every time we insert an element in the array and we access the element using front, which is also increased each time we access the front of the queue.

Finding the lowest scheduled node

The basic idea is to find the lowest reachable task. Any task is reachable if it is executing on any of the cpu in the affinity set of the newly arrived task or on the cpu in affinity of a task which is executing on a cpu which is in the affinity of the newly arrived task or on the cpu whi … (You get the idea :p, If not open this and you’d get to see a basic example where a task is getting shifted).

while( front <= rear ) {

curr_CPU = Struct[ front ].cpu;

front = front + 1;

curr_thread = curr_CPU->heir;

curr_node = _Thread_Scheduler_get_home_node( curr_thread );

curr_priority = _Scheduler_Node_get_priority( curr_node );

curr_priority = SCHEDULER_PRIORITY_PURIFY( curr_priority );

curr_strong_node = _Scheduler_strong_APA_Node_downcast( curr_node );

if ( curr_priority > max_priority_num ) {

lowest_reachable = curr_node;

max_priority_num = curr_priority;

*cpu_to_preempt = curr_CPU;

}

Corresponding link to code

If the current node scheduled on the cpu which is in front of the queue has the lowest priority seen so far (i.e. the highest priority number), we mark this node as the lowest_reachable node and save its cpu in the cpu_to_preempt variable (Here since the cpu_to_preempt is a double pointer, it’s value is the address to the pointer that points to the Per_CPU_Control of cpu in front of queue. This value of the pointer is the value stored in cpu_to_preempt in calling Enqueue function since it was passed by reference),

if ( !curr_thread->is_idle ) {

for ( cpu_index = 0 ; cpu_index < cpu_max ; ++cpu_index ) {

if ( _Processor_mask_Is_set( &curr_strong_node->Affinity, cpu_index ) ) {

//Checks if the thread_CPU is in the affinity set of the node

Per_CPU_Control *cpu = _Per_CPU_Get_by_index( cpu_index );

if ( _Per_CPU_Is_processor_online( cpu ) && Struct[ cpu_index ].visited == false ) {

rear = rear + 1;

Struct[ rear ].cpu = cpu;

Struct[ cpu_index ].visited = true;

Struct[ cpu_index ].caller = curr_node;

}

}

}

}

}

return lowest_reachable;

Corresponding link to code

If the task is not an idle task, then we could check the task executing on the cpu in its affinity set to check for a possible lowest priority reachable task, thus we put its cpu in the end of the queue. caller here is used to indicate that this node is responsible for calling the cpu (putting it in the queue), so later while backtracking, this node should preempt this cpu (since all the task preempt the cpu that they put on the queue). A node may enqueue more than one cpu (since a task has more than 1 cpu in its affinity set) but would only preempt one cpu, the cpu that it would preempt is decided by setting the invoker variable (coming up later in this post) while backtracking from cpu_to_preempt.

Backtracking to preempt the lowest scheduled node

cpu_to_preempt would stay nil in the case we could not find any node with a priority lower than the newly arrived task, in which case the latter would be put in a ready queue and would ask for help.

if( lowest_priority > node_priority ) {

curr_node = Struct[ _Per_CPU_Get_index(cpu_to_preempt) ].caller;

curr_strong_node = _Scheduler_strong_APA_Node_downcast( curr_node );

curr_strong_node->invoker = cpu_to_preempt;

//Save which cpu to preempt in invoker value of the node

while( curr_node != node ) {

curr_CPU = _Thread_Get_CPU( curr_node->user );

curr_node = Struct[ _Per_CPU_Get_index( curr_CPU ) ].caller;

curr_strong_node = _Scheduler_strong_APA_Node_downcast( curr_node );

curr_strong_node->invoker = curr_CPU;

}

Corresponding link to code

In order to find the right set of node and cpu’s to preempt, we start backtracking from cpu_to_preempt by looking at the caller node of the cpu_to_preempt and save the cpu in the caller node’s invoker. This invoker value is used to preempt the cpu.

We start from node which calls the cpu_to_preempt, that is the caller of cpu_to_preempt (the node which put the cpu_to_preempt which has the lowest scheduled task executing on it) since it would be the node which would actually preempt cpu_to_preempt since it is in the affinity set of the node and then we get the cpu of the node which was caller of cpu_to_preempt and we find its caller and so on.

next_thread = curr_strong_node->invoker->heir;

next_node = _Thread_Scheduler_get_home_node( next_thread );

node_priority = _Scheduler_Node_get_priority( curr_node );

node_priority = SCHEDULER_PRIORITY_PURIFY( node_priority );

_Scheduler_SMP_Enqueue_to_scheduled(

context,

curr_node,

node_priority,

next_node,

_Scheduler_SMP_Insert_scheduled,

_Scheduler_strong_APA_Move_from_scheduled_to_ready,

_Scheduler_strong_APA_Allocate_processor

);

curr_node = next_node;

curr_strong_node = _Scheduler_strong_APA_Node_downcast( curr_node );

while( curr_node != lowest_reachable) {

next_thread = curr_strong_node->invoker->heir;

next_node = _Thread_Scheduler_get_home_node( next_thread );

//curr_node preempts the next_node;

_Scheduler_SMP_Preempt(

context,

curr_node,

next_node,

_Scheduler_strong_APA_Allocate_processor

);

curr_node = next_node;

curr_strong_node = _Scheduler_strong_APA_Node_downcast( curr_node );

}

_Scheduler_strong_APA_Move_from_scheduled_to_ready(context, lowest_reachable);

needs_help = false;

} else {

needs_help = true;

}

Corresponding link to code

Here we start preempting the cpu from the node(the newly arrived node) until just before the caller of cpu_to_preempt and we save the invoker of the node that we are preempting, so that the node which gets preempted knows which node it is supposed to preempt and so on.

The very first preemption (From newly arrived node to the cpu which it called) is done via _SMP_Enqueue_to_Scheduled

Finally we preempt the lowest_reachable node with its caller and remove it from the list of scheduled_node maintained by SMP scheduler in its context be calling move_from_scheduler_to_ready.

needs_help is returned true if the lowest reachable node has a higher priority than our current newly arrived node, in which case the node is put in ready queue and asks for help.

Hope you enjoyed the explanation,

Thanks!